Documentation

Overview

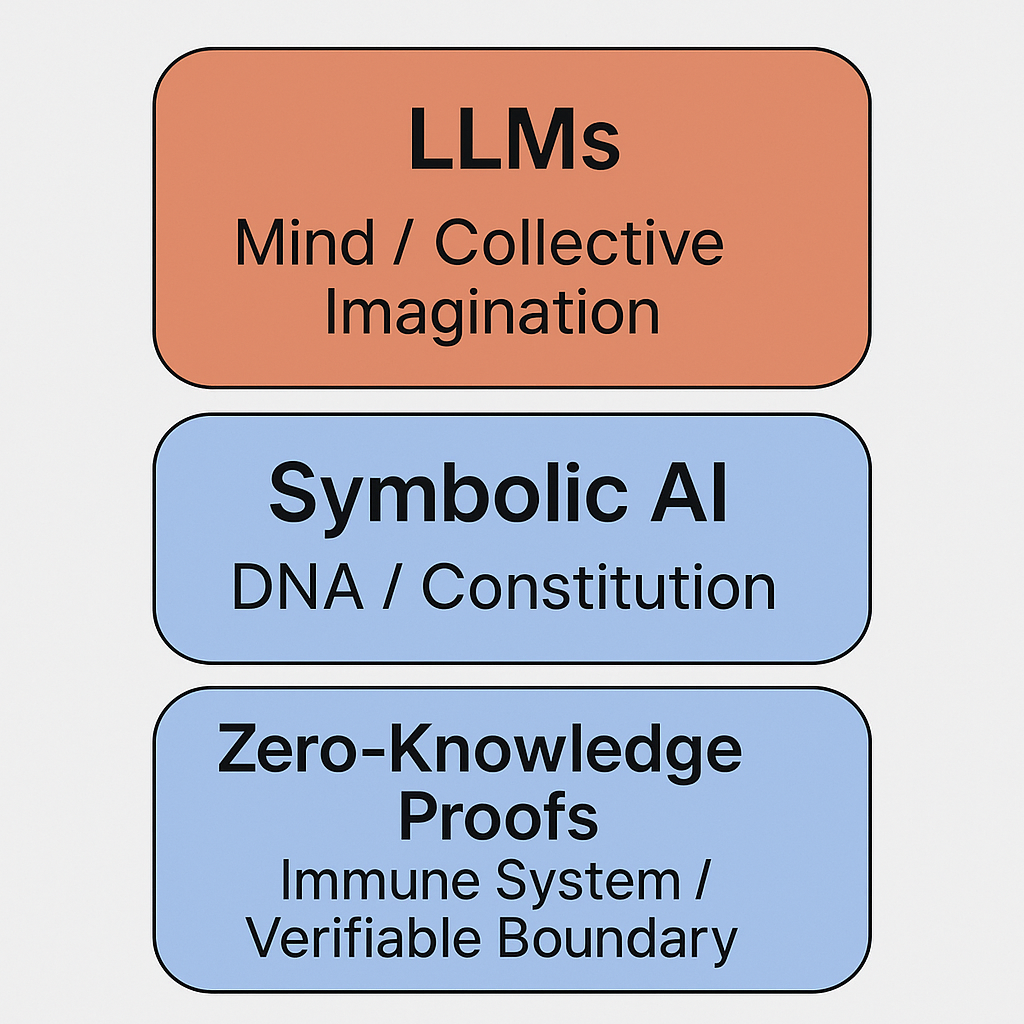

Skyla is a cryptographically verified symbolic AI agent demonstrating verifiable multi-model consensus and integrity measurement. It combines symbolic reasoning with Claude AI integration to create transparent, auditable AI behavior.

Currently Implemented:

- True 2-Model Consensus System (Claude Haiku vs Sonnet)

- Advanced architectural selection with quality scoring

- Symbolic state transitions with deterministic semantics

- Context-aware conversation memory (10 exchanges per session)

- Zero-knowledge proof generation for all state changes

- Real-time integrity measurement and divergence analysis

Prospective Features:

- Full cryptographic verification infrastructure

- Production-ready API endpoints

- Multi-agent coordination systems

Architecture

Skyla's architecture implements a multi-model consensus system with architectural diversity:

Multi-Model Consensus Layer

The system uses two distinct Claude architectures for diversity:

- Claude Haiku: Efficiency-focused, optimized for speed and conciseness

- Claude Sonnet: Quality-focused, optimized for nuance and depth

Advanced Selection Algorithm

The system scores each response on five metrics:

- Adaptive Length Scoring: Optimal response length based on query complexity

- Nuance Detection: Identifies philosophical and analytical concepts

- Efficiency Indicators: Measures directness and clarity

- Semantic Richness: Evaluates vocabulary diversity

- Structural Coherence: Assesses sentence quality and organization

Architectural Bonuses

The system provides context-aware architectural preferences:

- Simple queries → +0.1 boost for Haiku (efficiency advantage)

- Complex queries → +0.1 boost for Sonnet (nuance advantage)

- Balanced queries → Merit-based selection with no bias
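
The bonus rules above can be sketched as follows. This is a minimal illustration, not Skyla's actual code: the `Candidate` type, the `base_quality` field (standing in for the combined five-metric score, whose weighting the documentation does not specify), and the function names are all assumptions.

```python
from dataclasses import dataclass
from typing import Iterable

ARCH_BONUS = 0.1  # boost size taken from the list above


@dataclass(frozen=True)
class Candidate:
    model: str           # "haiku" or "sonnet" (hypothetical labels)
    base_quality: float  # assumed: combined score from the five metrics


def score_with_bonus(candidate: Candidate, complexity: str) -> float:
    """Add the context-aware bonus to a candidate's base quality."""
    if complexity == "simple" and candidate.model == "haiku":
        return candidate.base_quality + ARCH_BONUS
    if complexity == "complex" and candidate.model == "sonnet":
        return candidate.base_quality + ARCH_BONUS
    # Balanced queries: merit only, no bonus for either model.
    return candidate.base_quality


def select(candidates: Iterable[Candidate], complexity: str) -> Candidate:
    """Pick the candidate with the highest bonus-adjusted score."""
    return max(candidates, key=lambda c: score_with_bonus(c, complexity))
```

Because the bonus is only 0.1, a response that scores well above the other on merit still wins regardless of query complexity.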

Multi-Model Consensus System

Status: Currently Implemented - This is the core innovation of Skyla's current implementation.

True Architectural Diversity

Unlike systems that use duplicate models, Skyla implements genuine consensus measurement between two different Claude architectures.
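
As a rough sketch of querying two architectures side by side, the snippet below fans one prompt out to two model callables concurrently. The `query_both` helper and the two stand-in coroutines are hypothetical. A real deployment would replace them with calls to the Claude API, which this sketch deliberately does not attempt to reproduce.

```python
import asyncio
from typing import Awaitable, Callable, Dict

# Assumed interface: a model is any coroutine that maps a prompt to a reply.
ModelFn = Callable[[str], Awaitable[str]]


async def query_both(prompt: str, models: Dict[str, ModelFn]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently and collect replies."""
    names = list(models)
    replies = await asyncio.gather(*(models[name](prompt) for name in names))
    return dict(zip(names, replies))


# Stand-ins for the two architectures (illustrative only).
async def fake_haiku(prompt: str) -> str:
    return f"short answer to: {prompt}"


async def fake_sonnet(prompt: str) -> str:
    return f"A longer, more detailed answer to: {prompt}"


if __name__ == "__main__":
    result = asyncio.run(
        query_both("hello", {"haiku": fake_haiku, "sonnet": fake_sonnet})
    )
    print(result)
```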

Dynamic Token Allocation

The system dynamically adjusts response length based on input complexity:

- Simple queries (≤50 chars): 400 tokens

- Medium queries (51-100 chars): 600 tokens

- Complex queries (>100 chars): 800 tokens
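
The thresholds above map directly onto a small lookup function. The function name is hypothetical, and counting characters with `len` (Unicode code points) is an assumption about what "chars" means here.

```python
def max_tokens_for(query: str) -> int:
    """Return the token budget for a query, based on its character count."""
    length = len(query)
    if length <= 50:
        return 400   # simple query
    if length <= 100:
        return 600   # medium query
    return 800       # complex query
```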

Query Complexity Analysis

The system automatically categorizes input complexity.

Integrity Measurement

The system measures genuine divergence between the two architectures across 4 dimensions:

- Length Variance: Response length differences

- Sentiment Divergence: Emotional tone variations

- Topic Divergence: Subject matter approach differences

- Tone Consistency: Communication style variations
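
The documentation does not state how each dimension is computed, so the following is only one plausible sketch. It shows two of the four dimensions (length and topic) and combines per-dimension divergences into a single integrity score. The formulas, the unweighted mean, and treating every dimension as a divergence in [0, 1] are all assumptions.

```python
import re
from typing import Mapping


def length_variance(a: str, b: str) -> float:
    """Relative length difference in [0, 1]; 0 means equal length."""
    longest = max(len(a), len(b))
    return 0.0 if longest == 0 else abs(len(a) - len(b)) / longest


def topic_divergence(a: str, b: str) -> float:
    """1 minus the Jaccard similarity of the two lowercase word sets."""
    words_a = set(re.findall(r"[a-z']+", a.lower()))
    words_b = set(re.findall(r"[a-z']+", b.lower()))
    union = words_a | words_b
    return 0.0 if not union else 1.0 - len(words_a & words_b) / len(union)


def integrity(divergences: Mapping[str, float]) -> float:
    """Integrity as 1 minus the mean divergence (assumed aggregation)."""
    return 1.0 - sum(divergences.values()) / len(divergences)
```

For example, two responses that differ only in length by half would give `integrity({"length": 0.5, "sentiment": 0.0, "topic": 0.0, "tone": 0.0})`, which evaluates to 0.875 under these assumptions.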

Fair Selection Algorithm

Selection is driven by a numeric quality score computed from each response's content, rather than by a fixed preference for either model.

Consensus Results

The system produces verifiable consensus measurements:

- High Integrity (>80%): Strong agreement between architectures

- Medium Integrity (50-80%): Moderate agreement with noted divergence

- Low Integrity (<50%): Significant disagreement, clarification requested
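
The three bands above can be expressed as a simple classifier. The function name and the choice to represent integrity as a fraction between 0 and 1 are assumptions. The boundaries follow the list, with exactly 80% and exactly 50% both falling in the medium band.

```python
def integrity_band(score: float) -> str:
    """Map an integrity score in [0, 1] to the band named in the docs."""
    if score > 0.80:
        return "high"    # strong agreement between architectures
    if score >= 0.50:
        return "medium"  # moderate agreement with noted divergence
    return "low"         # significant disagreement, clarification requested
```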

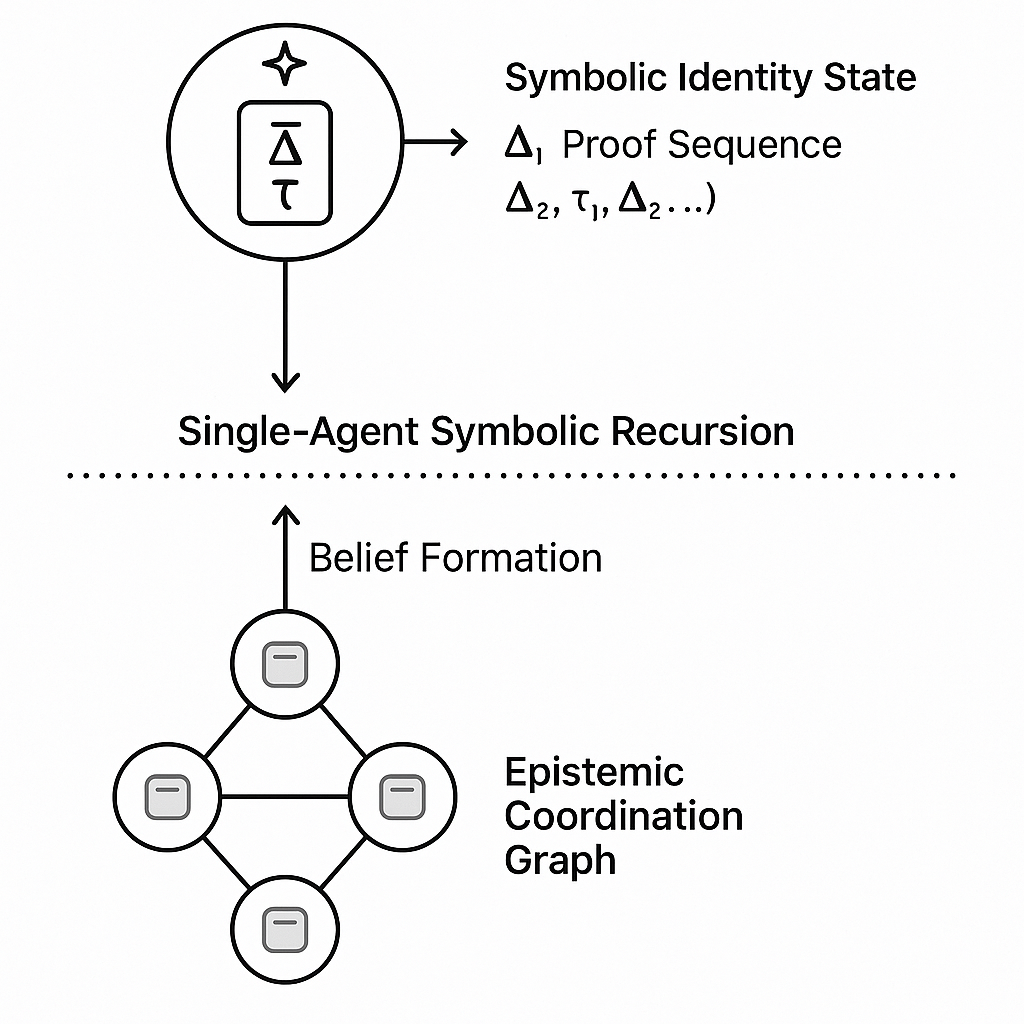

Identity Vector System

Status: Currently Implemented - Client-side state management with server-side context awareness.

Architecture Overview

Skyla's identity vector system implements a client-side state management architecture that ensures cryptographic verifiability and deterministic behavior.

4-Dimensional State Space

The identity vector represents agent state as: [cognitive, emotional, adaptive, coherence]
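
One way to model this state is an immutable four-field record, so that every transition yields a new vector instead of mutating the old one. The class name, the `shifted` method, and the clamping of each component to [0, 1] are assumptions. The documentation names the four dimensions but not their value range.

```python
from dataclasses import astuple, dataclass
from typing import Sequence


@dataclass(frozen=True)
class IdentityVector:
    cognitive: float
    emotional: float
    adaptive: float
    coherence: float

    def shifted(self, delta: Sequence[float]) -> "IdentityVector":
        """Return a new vector with delta applied, clamped to [0, 1] (assumed range)."""
        if len(delta) != 4:
            raise ValueError("delta must have exactly four components")
        values = (min(1.0, max(0.0, v + d)) for v, d in zip(astuple(self), delta))
        return IdentityVector(*values)
```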

Client-Server Separation

The system maintains strict separation of concerns:

- Client Side: Computes all vector transitions using deterministic algorithms

- Server Side: Receives vector for contextual awareness, never modifies it

State Transition Processing

Data Flow

Cryptographic Benefits

This architecture provides:

- Verifiable Determinism: Same input always produces same vector change

- Mathematical Proof: All transitions can be independently verified

- No State Corruption: Server cannot accidentally modify agent state

- Transparency: All state logic visible in client-side code

Semantic Engine

Skyla's semantic engine processes natural language input through three layers of recognition, providing scalable pattern matching that maintains cryptographic integrity.

Processing Layers

The engine handles input through a hierarchical system that prioritizes exact symbolic matches, falls back to semantic patterns, then uses deterministic hashing for unknown inputs.

1. Exact Symbolic Layer

Direct matches for predefined symbolic triggers like daemon, spiral, build, or analyze. These trigger specific rule sets with predetermined state adjustments.

2. Semantic Pattern Matching

Regex-based categorization that recognizes natural language patterns and applies contextual vector adjustments.

3. Hash Fallback System

For inputs that don't match symbolic or semantic patterns, the system generates deterministic micro-adjustments using a hash function. This ensures consistent behavior for the same input while keeping changes within safe bounds (±0.05).
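
A hash fallback of this kind might look like the sketch below. The documentation does not name the hash function or the mapping, so SHA-256 and the two-bytes-per-dimension scaling are assumptions chosen for illustration. Only the determinism and the ±0.05 bound come from the text.

```python
import hashlib
from typing import Tuple

MAX_DELTA = 0.05  # bound stated in the docs


def hash_fallback(text: str) -> Tuple[float, float, float, float]:
    """Derive four deterministic micro-adjustments in [-0.05, 0.05] from the input."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    deltas = []
    for i in range(4):
        # Two digest bytes per dimension give an integer in [0, 65535].
        raw = int.from_bytes(digest[2 * i : 2 * i + 2], "big")
        # Scale to [0, 1], then stretch and shift into [-MAX_DELTA, +MAX_DELTA].
        deltas.append((raw / 65535) * 2 * MAX_DELTA - MAX_DELTA)
    return tuple(deltas)
```

Calling `hash_fallback` twice with the same string returns the same tuple, which is the property the proofs rely on.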

Scaling Properties

The semantic engine scales without rule explosion: new regex patterns can be added without touching core logic. Every transition type (symbolic, semantic, or hash-based) generates a valid ZK proof, which maintains cryptographic integrity.

Identity Vector Architecture

The Identity Vector System defines the mathematical foundation of Skyla's state management. This 4-dimensional vector space enables precise, deterministic state transitions while maintaining cryptographic verifiability.

Vector Structure

State Transition Mechanics

The system uses three-layer processing to ensure deterministic, mathematically consistent state changes:

- Symbolic Rules: Direct pattern matching for commands like "spiral", "daemon", "build"

- Semantic Patterns: Regex-based categorization with precise mathematical deltas

- Hash Fallback: Deterministic micro-adjustments (±0.05) for unknown inputs
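
The priority order of the three layers can be sketched as a small resolver that reports which layer would handle an input. The trigger words come from the documentation. The two regex categories are invented placeholders, and matching triggers against the whole trimmed, lowercased input is an assumption about what "exact" means.

```python
import re
from typing import Optional, Tuple

SYMBOLIC_TRIGGERS = {"daemon", "spiral", "build", "analyze"}  # from the docs

# Hypothetical semantic categories; the real patterns are not documented.
SEMANTIC_PATTERNS = {
    "question": re.compile(r"\?\s*$"),
    "gratitude": re.compile(r"\b(thanks|thank you)\b", re.IGNORECASE),
}


def resolve_layer(text: str) -> Tuple[str, Optional[str]]:
    """Return (layer, rule name) using symbolic > semantic > hash priority."""
    token = text.strip().lower()
    if token in SYMBOLIC_TRIGGERS:
        return ("symbolic", token)
    for name, pattern in SEMANTIC_PATTERNS.items():
        if pattern.search(text):
            return ("semantic", name)
    # Nothing matched: the deterministic hash fallback handles the input.
    return ("hash", None)
```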

Mode Transitions

State & Proofs

Every state transition in Skyla generates a cryptographic proof that can be independently verified. This ensures complete transparency and auditability of agent behavior.
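
To illustrate what independent verification of a deterministic transition can mean, the sketch below commits to a transition with a SHA-256 digest and checks it by replaying the transition. This is a plain hash commitment, not a zero-knowledge proof, and it is not Skyla's proof format. The record fields and function names are assumptions.

```python
import hashlib
import json
from typing import Callable, Dict, List, Sequence


def transition_digest(prev: Sequence[float], user_input: str, new: Sequence[float]) -> str:
    """Hash a canonical JSON encoding of (previous vector, input, new vector)."""
    payload = json.dumps(
        {"prev": list(prev), "input": user_input, "new": list(new)},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def verify(record: Dict, transition_fn: Callable[[List[float], str], List[float]]) -> bool:
    """Replay the transition and confirm both the new vector and the digest match."""
    expected_new = transition_fn(list(record["prev"]), record["input"])
    if list(expected_new) != list(record["new"]):
        return False
    expected_digest = transition_digest(record["prev"], record["input"], expected_new)
    return expected_digest == record["digest"]
```

Because the transition function is deterministic, any party holding the same function can rerun it and reach the same digest without trusting the party that produced the record.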

Proof Envelope Structure

API (Currently Available)

The Skyla API provides a working endpoint for multi-model consensus interaction.

Claude Consensus Endpoint

Identity Vector Context

The server uses the identity vector for contextual awareness only - it never modifies the vector.
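
A read-only use of the vector on the server could be as simple as formatting it into the system prompt. The prompt wording and the `build_system_prompt` name below are hypothetical. The sketch only shows that the vector is read and rendered, never written back.

```python
from typing import Sequence

DIMENSIONS = ("cognitive", "emotional", "adaptive", "coherence")


def build_system_prompt(vector: Sequence[float]) -> str:
    """Render the identity vector as context text without modifying it."""
    if len(vector) != len(DIMENSIONS):
        raise ValueError("identity vector must have exactly four components")
    state = ", ".join(
        f"{name}={value:.2f}" for name, value in zip(DIMENSIONS, vector)
    )
    return f"Current agent state (read-only context): {state}."
```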

Response Format

Planned Endpoints (Not Yet Implemented)

FAQ

What's actually implemented vs conceptual?

Currently working: a true 2-model consensus system (Claude Haiku vs Sonnet), advanced quality scoring, architectural selection, context memory, and integrity measurement.

Conceptual: full cryptographic verification, and multi-agent systems with epistemic telemetry coordination.

How does the consensus system work?

Skyla sends each query to both Claude Haiku and Sonnet simultaneously, measures their response divergence across 4 dimensions, then selects the best response using a 5-factor quality scoring algorithm that considers query complexity and architectural strengths.

Why does model selection vary for the same input?

This is expected behavior. The system evaluates actual response content, not just input patterns. If Haiku produces a more nuanced response than Sonnet for a complex query, Haiku's quality score can exceed Sonnet's even after Sonnet's +0.1 bonus, and Haiku is selected. Selection therefore reflects measured response quality rather than a fixed preference for one model.

Can I see the selection reasoning?

Yes! Check the browser console during demo usage to see detailed quality scoring: 🎯 claude-3-haiku: Quality=0.823 (nuance=0.67, efficiency=0.00, bonus=0.00)

Is this actually measuring uncertainty?

Yes, Skyla demonstrates genuine uncertainty quantification through consensus measurement between architecturally different models. Unlike systems using duplicate models, this provides authentic diversity measurement.

How does the AI understand the identity vector?

The server includes the identity vector in the system prompt sent to Claude models, providing contextual awareness of the agent's current state without allowing modification of that state.

Is the system production-ready?

The multi-model consensus system is functional and demonstrates AI integrity measurement. However, full cryptographic verification and production APIs are still in development.